How to deliver code efficiently in a startup

While working for startups, I learned that priorities change far faster than anyone expects. The change can take the form of a strategic pivot, or of a request from sales like “can we close feature A before B, because it will help us reach the sales target for this quarter?”. However, being a one-man army (or even 1/nth of the army) is a resource-scarce situation. It requires the skeleton team to reconcile feature delivery with production support and incident handling. When an error in an email service sends hundreds of emails instead of one, or a misconfigured lambda causes an infinite (and expensive) loop, that is also a priority switch. A very urgent one.

How can an engineer stay productive and continuously deliver in these conditions? With the good old “divide and conquer” strategy and a few other useful and known practices.

Divide and conquer

I have often heard from senior engineers, management and a lot of YouTube lecturers some version of: “an engineer should split their work into small manageable chunks”. But what does that mean in practice? How do you measure it? When is a chunk small enough?

I would like to propose a heuristic: a manageable chunk of code is something that can be deployed to production in a matter of hours while following all the processes and security checks (no cheating!). A change that small can also be rolled back easily.

One of the processes that I would like to highlight here is peer review in the form of pull requests (merge requests). It matters because this process is invaluable, yet it can stop development for days. What is more, well-organized commits and merges help future readers (that is you!) understand what happened.

The nature of software development makes it hard to share the context of every single line of code with other developers, yet we do ask for a thorough review. If a pull request is off-putting to the reviewer, the review gets delayed, or the change gets approved without being read. A quick example of an off-putting pull request is one that contains changes in 100 files. A more sophisticated example is one that consists of a number of conceptual changes, such as:

- Moving files to a different package,

- Adding logging utilities,

- Applying the utilities in 8 classes not related to the feature development,

- Feature A

I did that in the past and do my best to not do that anymore, because I also review code a lot. If a PR is not well structured it can take more than an hour to review it.

Conceptual changes

The idea of conceptual changes is basically the Single Responsibility Principle applied to Pull Requests.

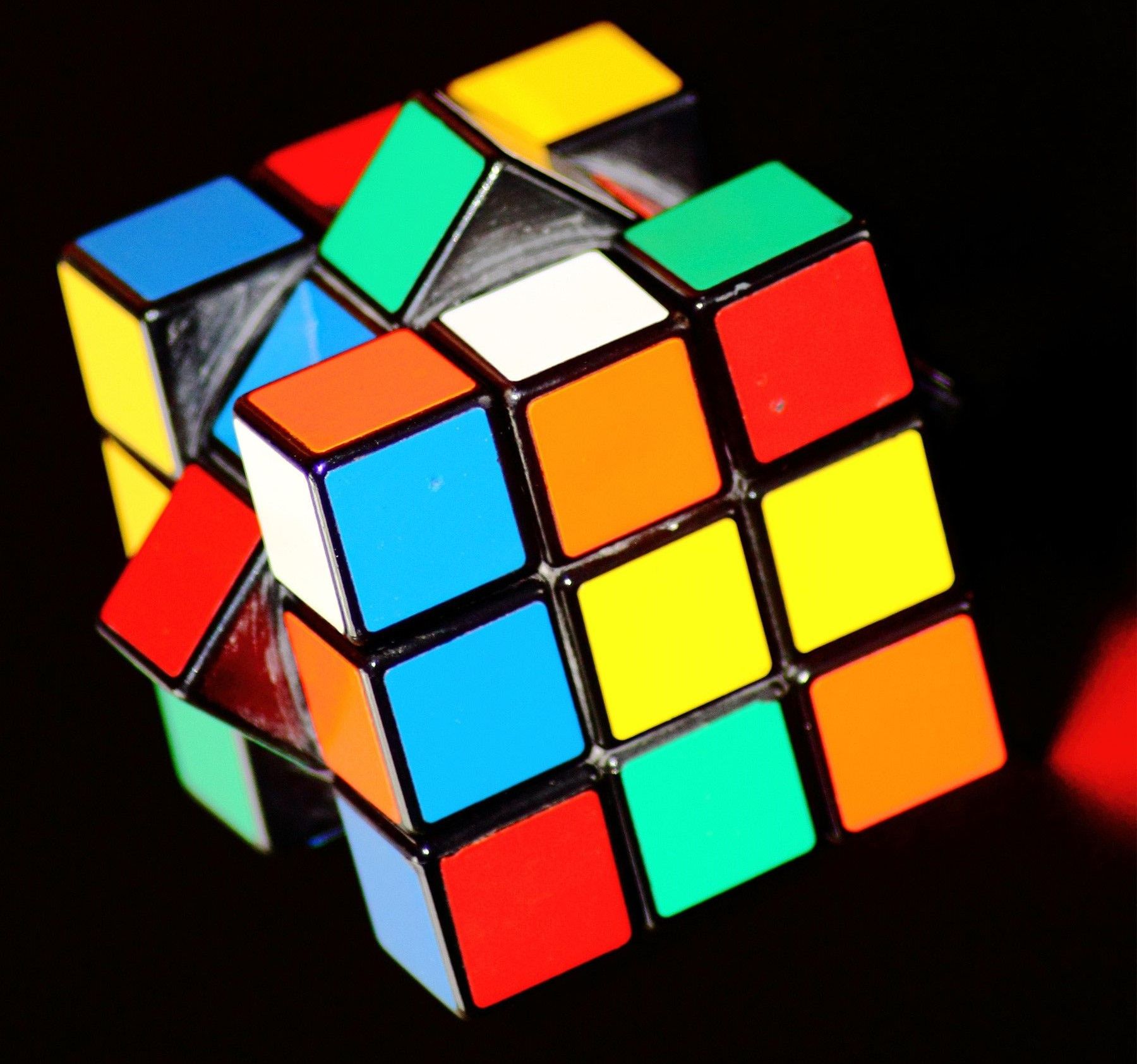

A conceptual change does not equal a feature, nor a change set (or a single commit) from a git perspective. When I think about it, I picture a Rubik's Cube. When you are solving this puzzle, every move is a change in a single dimension that leaves the cube in a stable state. By stable state I mean: the next move is possible and the cube is not broken. I didn’t say that every dimension is correct after a single move.

What does it mean in an engineering context? There are multiple dimensions in play: business features, architecture, clean code, deployment. Every change has to leave the system in a stable state: there are no failed tests, the code compiles, no OWASP check fails, … but first and foremost, the code can be deployed to production.

An example would be the first user-related microservice in the system. One way to approach it could be to build a big change set consisting of:

- Deployment script (like helm file)

- User registration API

- Integration with email service

- User password reset API

- Personal detail change API

- Merge

The “Rubik's Cube” approach would split it into:

- User registration API

- Merge

- Integration with email service

- Merge

- Deployment script

- Merge

- User password reset API

- Merge

- Personal detail change API

- Merge

Every bullet here is a change in a single dimension only, and every conceptual change is merged to the main branch. There are a few benefits stemming from this approach:

- Pull requests are smaller, easier to read

- Constantly merging into the main branch causes fewer conflicts and lowers the risk of growing long-lived, or soon-to-be-dead, branches

- If priorities change before the “password reset” and “personal detail change” steps are started, 3 out of 5 conceptual changes are already finished. The remaining 2 can be discarded or postponed, and the finished subset of the work can still be used.

- A bonus: it feels great to merge a couple of times a day. There is a sense of accomplishment in it.

The cool thing about this is that it is in line with another “best practice” called trunk-based development.

A careful reader will have noticed that I have not mentioned any tests here. Tests are the way to make sure the cube is not broken, and thus an integral part of any change. In the example above, “User registration API” means both the code and the tests that cover it. Personally, I am a fan of Test-Driven Development. It forces developers to think about the expected outcome rather than the implementation. However, I appreciate that sometimes preparing the whole infrastructure for tests can get very expensive.
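To make “tests and code in one conceptual change” concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `UserRegistry` class, the `DuplicateEmailError` exception and the in-memory storage are hypothetical stand-ins, not the actual registration API. In a test-first flow the two test methods would be written before `register` exists, and then merged together with it.

```python
import unittest


class DuplicateEmailError(Exception):
    """Raised when an email address is registered twice (hypothetical)."""


class UserRegistry:
    """Hypothetical in-memory stand-in for the user registration service."""

    def __init__(self):
        self._ids_by_email = {}

    def register(self, email):
        # Normalize so that " Ann@Example.com " and "ann@example.com" collide.
        key = email.strip().lower()
        if key in self._ids_by_email:
            raise DuplicateEmailError(key)
        user_id = len(self._ids_by_email) + 1
        self._ids_by_email[key] = user_id
        return user_id


class UserRegistrationTest(unittest.TestCase):
    """The expected outcomes, written down before the implementation."""

    def test_new_email_gets_an_id(self):
        self.assertEqual(UserRegistry().register("ann@example.com"), 1)

    def test_duplicate_email_is_rejected(self):
        registry = UserRegistry()
        registry.register("ann@example.com")
        with self.assertRaises(DuplicateEmailError):
            registry.register(" Ann@Example.com ")
```

Saved as a module, the tests can be run with `python -m unittest`. The pull request for this conceptual change would contain exactly these two pieces, and nothing about email integration or password reset.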

A stable cube

A critic could say that this approach is basically merging and deploying unfinished business features. I agree! I just think that it is not a big deal if it does not have an impact on users. A comment for engineers who are working on an MVP: no users = no impact 🙂

For others: there are two approaches that I successfully used in the past to avoid system impairment for users, while continuously pushing code to production:

- Feature flags - a mechanism to “disable” a feature for all customers, or for a group of users, on a production environment. There are existing tools for that, and simpler mechanisms such as Spring profiles can do the job too. In the worst case, environment variables can be used to open or close features with a few lines of custom code.

- Manage by deployment - sooner or later there is a need to buffer changes going to the production environment. A team can merge and deploy whatever they like to a test environment; before moving changes to production, however, the team decides whether they are ready for customers.
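The “few lines of custom code” variant of a feature flag could look like the sketch below. It is only one possible shape, under stated assumptions: the `FEATURE_` prefix, the set of truthy values and the `handle_password_reset` handler are all hypothetical names invented for this example.

```python
import os
from typing import Mapping, Optional

# Values that count as "on" (an assumption; pick whatever convention suits the team).
_TRUTHY = {"1", "true", "yes", "on"}


def is_enabled(flag: str, env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True when the variable FEATURE_<FLAG> holds a truthy value.

    `env` defaults to the process environment; passing a plain dict
    keeps the function easy to test.
    """
    source = os.environ if env is None else env
    value = source.get(f"FEATURE_{flag.upper()}", "")
    return value.strip().lower() in _TRUTHY


def handle_password_reset(email: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Hypothetical handler: the code is deployed, but hidden while the flag is off."""
    if not is_enabled("password_reset", env):
        return "404 Not Found"
    return f"202 Accepted: reset link queued for {email}"
```

With `FEATURE_PASSWORD_RESET` unset, the endpoint behaves as if it did not exist, so the unfinished feature can be merged and deployed safely. Setting the variable to `true` on a test environment opens it there without another code change.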

A team can decide that the main branch is sacred and that only pure, approved code tested with >95% coverage goes there. Alternatively, it can choose speed of development and implement, for example, the Rubik's Cube approach by focusing on merging and publishing small conceptual changes to production as soon as possible.

Devil’s advocate

To play the devil’s advocate, the proposed approach is not suitable for every scenario. For example in a strictly regulated environment, there might be a need for a more sophisticated approach.

From a different point of view, the proposed approach emphasizes speed of development, which can get chaotic, so there is a need for oversight and a general roadmap.

Summary

This article covers a (not the) way to develop fast. It is a compilation of known practices, such as trunk-based development and feature flags, and personal experience.

As a founding engineer I am passionate about bringing value to customers, building products and helping other engineers do the same. This is why in the following articles I will go through the process of building a theoretical MVP step by step. I do intend to cover some theory, like SOLID, naming conventions etc., but it is going to be through practice. Hopefully, at the end of the road, a reader will be able to pursue their own business ideas and turn them into code.

References