Decision utils (utilities) Part #1

In the previous article I covered what influences decision making in individuals and teams. Initially I had planned to list a number of decision frameworks, so readers could pick and choose. However, after some research I found that there are already a number of articles and videos covering very robust decision-making frameworks, and I simply found them …too much…

I don’t think that a decision maker in a startup can realistically use a framework like the 7 steps to effective decision making for every single decision, or consult every team member in a democratic manner. Of course, that’s just my personal opinion 🙂 Instead of proposing a golden rule or trying to build a new sophisticated framework, I decided it would be more useful to collect a number of decision-related “utils”, with the aim of going broad rather than deep. This friendly bag of tools can be used freely wherever it seems fit, without commitment or methodology.

For a non-programming audience: almost every programming project ends up with a utils (utilities) package. It is a piece of code that is not directly related to the business logic, but provides shared functionality used across the code base.

Eisenhower matrix

The Eisenhower matrix aims to help with task prioritization. It forces the user to evaluate a task by answering two questions:

- Is it urgent?

- Is it important?

The task is then placed in the matching cell of the table below. Each cell suggests a strategy for how the task should be approached.

| Strategy | Urgent | Less urgent |
|---|---|---|
| Important | Do it first | Schedule |
| Less important | Delegate | Don’t do |
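Since the matrix boils down to two yes/no questions and a lookup, it can be written as a tiny "util" of its own. This is a minimal Python sketch, assuming a person has already labelled each task as urgent and/or important; the task names are hypothetical examples.

```python
from enum import Enum


class Action(Enum):
    """The four strategies from the Eisenhower matrix table."""
    DO_FIRST = "Do it first"
    SCHEDULE = "Schedule"
    DELEGATE = "Delegate"
    DONT_DO = "Don't do"


def eisenhower(urgent: bool, important: bool) -> Action:
    """Map the two yes/no answers to the suggested strategy of the matching cell."""
    if important:
        return Action.DO_FIRST if urgent else Action.SCHEDULE
    return Action.DELEGATE if urgent else Action.DONT_DO


# Hypothetical backlog: (task, urgent?, important?)
tasks = [
    ("Fix outage in production", True, True),
    ("Plan next quarter's roadmap", False, True),
    ("Reply to a routine vendor email", True, False),
    ("Reorganise old wiki pages", False, False),
]

for name, urgent, important in tasks:
    print(f"{name}: {eisenhower(urgent, important).value}")
```

The hard part is not the lookup but answering the two questions honestly; the code only makes the mapping explicit.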

Type 1 & 2 Decisions

This approach was eye-opening for me. It was introduced by Jeff Bezos, and the idea is to look at decisions from the perspective of their reversibility. Irreversible decisions are type 1 and require thorough analysis, such as consulting a senior member or conducting robust research. Type 2 decisions, on the other hand, might have a significant impact but can be undone, so they can be made quickly and close to where the work happens.

For me, this approach is very pragmatic and takes real life into account. Firstly, one cannot always wait for the company CEO to make a decision, because their first available slot might be next month. Secondly, for most mundane tasks an employee in the trenches can have a better view than a senior member in a strategic position. Thirdly, nothing brings ownership to the table like accountability for your own decisions.

A caveat here is that if every decision is type 1, then the process is broken. If everything is important, nothing is important, simple as that. It is not rare that, while the approach is being introduced, a person who was previously responsible for decision making struggles to “let go” and claims that 90% of decisions are type 1. It takes time to change the mindset and calibrate.

An example for an engineering department is API design. The way the team structures an internal API can be considered a type 2 decision, because the team has full control over it and can reverse any change. A public API, however, can be considered a type 1 decision: as soon as an API has external users, the team needs to uphold the contract, documentation, etc., which makes it much harder to change.
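Stripped to its core, the rule keys on a single question: can the decision be undone? Here is a minimal Python sketch of the API example, assuming the team sets the hypothetical `reversible` flag itself. Drawing that line is the genuinely hard part, and no code can do it for you.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    description: str
    reversible: bool  # hypothetical flag: can the change be undone without breaking a contract?


def decision_type(decision: Decision) -> int:
    """Type 1 = irreversible (slow and thorough), type 2 = reversible (fast)."""
    return 2 if decision.reversible else 1


def next_step(decision: Decision) -> str:
    """Suggest how much process the decision deserves, based on its type."""
    if decision_type(decision) == 1:
        return "analyse thoroughly: consult a senior member, do the research"
    return "decide within the team and adjust later if needed"


# The API example from the text; the reversible flag is the team's judgement call.
internal_api = Decision("Restructure an internal API", reversible=True)
public_api = Decision("Change a public API with external users", reversible=False)

for d in (internal_api, public_api):
    print(f"{d.description}: type {decision_type(d)} -> {next_step(d)}")
```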

Easier said than done! It is hard to draw the line where type 2 becomes type 1, and it can be very subjective. I would argue that an organization deciding to adopt this approach should remember to educate employees about the purpose of the tool and to draw some initial lines, so employees have somewhere to start their calibration.

Comparison

Given a number of options to choose from, we have to compare them somehow to reach a conclusion. In the previous article we introduced the impact of attribute alignability and presentation format on decision making. In short, it is easier for people to make a decision when the choices are nicely aligned and the differences are easy to compare. People like colorful tables and spreadsheets. It is worth remembering, especially when presenting options to C-level executives 🙂
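To make "nicely aligned" concrete: when each option is described by its own list of attributes, the first step is to put every attribute on a shared row, so that gaps become visible rather than hidden. A minimal Python sketch, using hypothetical options and attribute values purely for illustration:

```python
def align(options: dict[str, dict[str, str]]) -> list[list[str]]:
    """Put every option's attributes on shared rows; '-' marks a missing attribute."""
    names = list(options)
    attributes = sorted({attr for attrs in options.values() for attr in attrs})
    rows = [["Attribute", *names]]
    for attr in attributes:
        rows.append([attr, *(options[n].get(attr, "-") for n in names)])
    return rows


def render(rows: list[list[str]]) -> str:
    """Pad each column to its widest cell so the values line up visually."""
    widths = [max(len(row[i]) for row in rows) for i in range(len(rows[0]))]
    return "\n".join(
        " | ".join(cell.ljust(width) for cell, width in zip(row, widths)).rstrip()
        for row in rows
    )


# Hypothetical options and attributes, not real product data.
options = {
    "Option A": {"pricing": "per request", "free tier": "yes"},
    "Option B": {"pricing": "per hour", "support": "email"},
}
print(render(align(options)))
```

The '-' cells are the interesting part: they show where an option simply does not state something, which is easy to miss when reading two separate feature lists.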

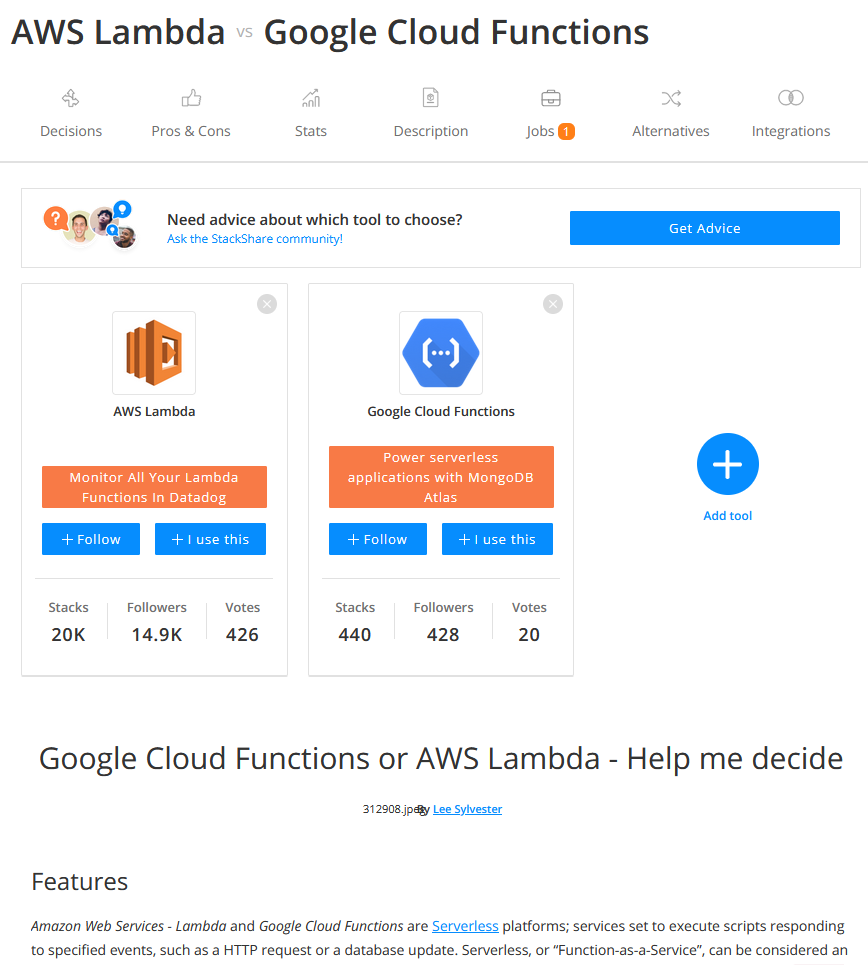

Luckily for us, there are products aiming to address this problem. For example stackshare.io, quick look at AWS Lambda vs Google Cloud functions, looks like:

giving us some nice, visual differentiation in basic statistics, while next sections in this case cover features, performance and testing. Common traits for both tools.

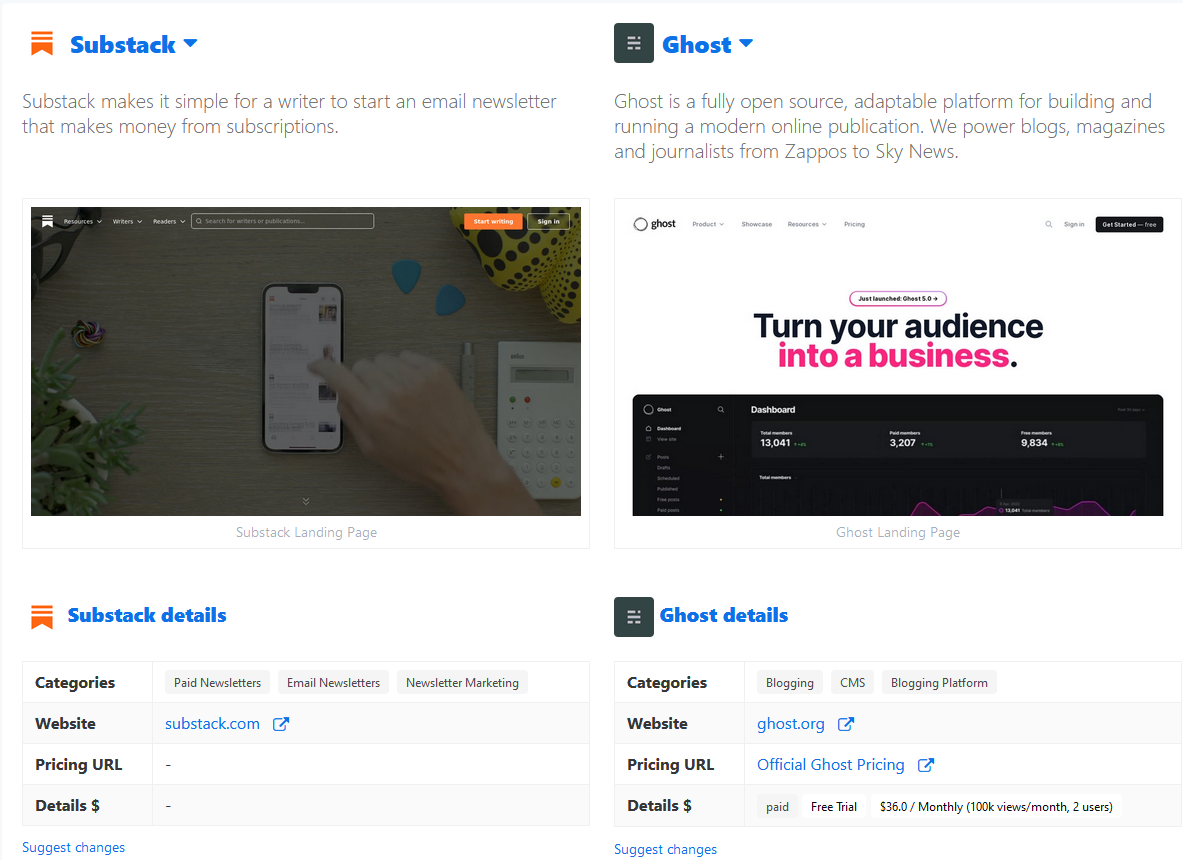

Another example is saashub. A quick comparison between two platforms: substack vs ghost looks like this:

In later sections, there are also videos, category popularity, reviews, social recommendations and mentions. Followed by a list of alternatives.

This article is not sponsored; I found these two services simply by typing “X vs Y” into the browser. There are a number of other services and bloggers doing similar work. (If there are any other tools worth mentioning, please feel free to comment on my Facebook page or send me an email. I am happy to extend this list.)

What I am trying to say is that some comparison work has already been done, and a decision maker can make use of it. However, it is worth checking when the comparison was last updated and by whom. Obviously, every product company tries to differentiate itself from its competitors. More than once I have seen such comparisons become obsolete. It’s like saying “our product is better than X, because X does not have Y”, but X did its homework and X.2 has it.

Tech stacks

When picking a technology stack for a project, it is worth considering some well-known stacks. There is a benefit to using these combinations, because there are communities and professionals well versed in integrating them. Some of the popular stacks are:

- ELK - Elasticsearch, Logstash, and Kibana

- LAMP - Linux, Apache, MySQL and PHP

- LEMP - Linux, Nginx (pronounced “engine-x”, hence the E), MySQL and PHP

- MEAN - JavaScript, MongoDB, Express, AngularJS and Node.js

- Ruby on Rails - JavaScript, Ruby, SQLite and Rails

- PERN - PostgreSQL, Express.js, React and Node.js

- Django - JavaScript, Python, Django and MySQL

Organization accountability

More than once:

- I have witnessed an error on a production system,

- my own work was the cause of it,

- I have seen all responsibility pushed onto an engineer (a person, not a department).

After a few years in the industry, I have come to realize that it is rarely purely an engineer’s fault. I am not trying to justify myself here; trust me, I am quite conscious of my mistakes. However, a failure to deliver, or an error on a production system, is often caused by the way the whole organization works rather than by a single person (although I am not saying that never happens). For example, when there is a huge push to deliver something fast, there is an increased risk of missing something during the QA phase. Or, when a bespoke feature is requested but never tested by the people presenting it to a customer, let’s be honest: the odds of the demo going well are not the highest.

What does this have to do with decision making? In the previous article we covered the impact of construal level (psychological distance) on decision making. Basically, if an employee is hunted down and judged for mistakes, they will become reluctant to make decisions or to step forward. This lack of psychological safety can cause a drastic drop in an employee’s performance and satisfaction.

Summary

I decided to split the topic into parts to keep the article size manageable. If there is something useful you think I should cover in the future, I invite you to drop a comment on my Facebook profile.